Microsoft finds itself embroiled in controversy yet again over an AI mishap, highlighting the ongoing challenge of deploying artificial intelligence responsibly. The tech giant is facing backlash after an AI-generated poll with insensitive, speculative options appeared alongside a news article about the tragic death of a young woman.

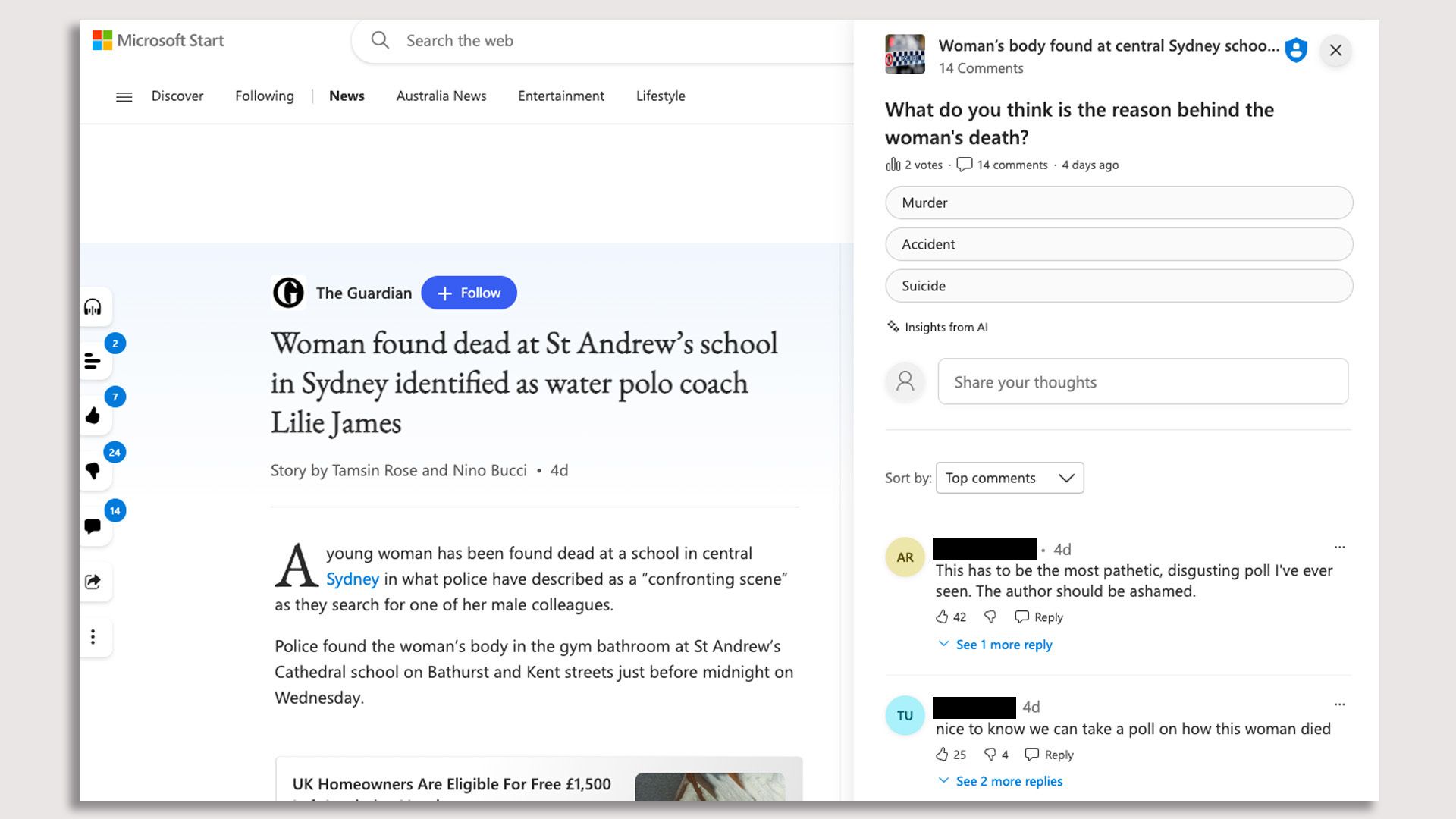

The article in question, published by the Guardian, covered the death of 21-year-old Lilie James, a water polo coach who was found dead with serious head injuries at a high school in Sydney. The AI poll that appeared next to the story on Microsoft Start, the company’s news aggregation platform, asked readers to speculate on the cause of James’ death, offering the options “murder,” “suicide,” or “accident.”

Publisher Decries “Inappropriate Use of AI”

The Guardian Media Group issued a stern rebuke of Microsoft over the AI poll, saying it caused “significant reputational damage” to the publisher and the journalist. Guardian CEO Anna Bateson wrote a letter to Microsoft President Brad Smith, arguing the poll was an “inappropriate use of genAI by Microsoft on a potentially distressing public interest story.”

Bateson argued that the speculative poll was insensitive to James’ grieving family and also damaged the Guardian’s reputation, as some readers wrongly assumed the outlet had created it. She called on Microsoft to take “full responsibility” for the blunder and to be transparent about its use of AI on news content. The publisher also urged Microsoft not to apply experimental AI technologies alongside Guardian stories without its consent.

Microsoft Apologizes, Disables AI Polls

Following the intense criticism, Microsoft apologized and said it was investigating the cause of the “inappropriate content.” The company disabled AI-generated polls for all news articles on its platforms and promised to take steps to prevent similar errors in the future.

“A poll should not have appeared alongside an article of this nature, and we are taking steps to help prevent this kind of error from reoccurring in the future,” a Microsoft spokesperson stated.

Continuing Challenges Around AI Ethics

The disturbing incident highlights the work still needed to ensure AI is deployed ethically, particularly when generating or interacting with news content. While AI has made strides in recent years, models can still create offensive, speculative, or insensitive content if not properly monitored and controlled.

“This is an inappropriate use of genAI by Microsoft on a potentially distressing public interest story, originally written and published by Guardian journalists,” Bateson wrote in her letter rebuking the tech company.

The automatic generation of a poll inviting speculation about a young woman’s tragic death underscores the real-world harm that irresponsible use of AI can cause. It also shows the limits of AI models in handling nuanced ethical considerations around sensitive subjects.

Calls for Greater Oversight

The tech industry is facing growing calls to ensure AI models act responsibly and safely when deployed in real environments. Some experts argue for greater oversight of news-related AI, including the use of human moderators.

There are also increased demands for tech platforms to be transparent when AI is used to generate content, so the public understands what was created by machines versus humans. The Guardian suggested Microsoft should have disclosed the poll was AI-generated.

While AI governance remains a complex challenge, Microsoft’s misstep stands as a prime example of what can go wrong without proper ethics policies and safeguards in place. The company is now tasked with preventing similar AI blunders as it works to build trust and reassure the public.

The controversial poll regrettably illustrates that, despite significant progress, AI does not yet grasp the nuances and sensitivities vital for navigating complex real-world issues. Ensuring AI models act ethically remains an urgent priority for tech companies as the technology becomes more advanced and widely deployed.